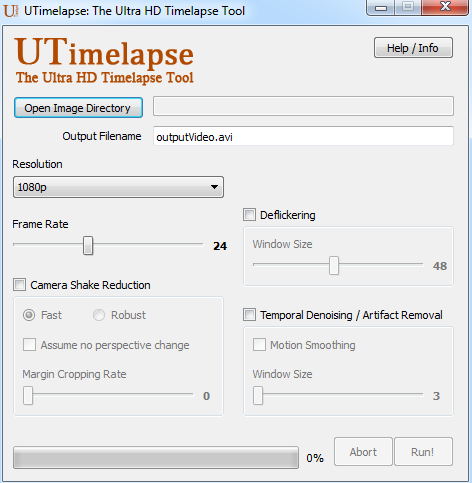

UTimelapse: a tool for creating high-quality timelapse videos

May 4, 2014

UTimelapse is a tool that creates timelapse videos from a sequence of still images. It helps create high-quality videos without requiring high-end equipment. Its features include camera shake reduction, deflickering, and temporal smoothing.

Demo

Camera Shake Reduction

This feature detects and compensates for movements of the camera between consecutive frames by aligning the frames based on detected motion.

The app provides two options for this feature: a fast mode and a robust mode. In the fast mode, it estimates the optical flow between consecutive frames and aligns the images based on that estimate. In the robust mode, it uses a feature-based pipeline (Scale-Invariant Feature Transform (SIFT) keypoints, OpenCV's FLANN-based matcher, and Random Sample Consensus (RANSAC)) to detect and compensate for camera shake. The robust mode is more computationally expensive, but it is more reliable and accurate than the fast mode.

Both modes compute a homography matrix and use it to warp each image to a common reference frame. To avoid aligning across scene changes, the app first checks whether the determinant of the homography matrix is very close to zero or too large. If so, it assumes that there are no valid point correspondences between the consecutive frames and skips the alignment.
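The determinant check can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `is_plausible_homography` and the thresholds `det_min` and `det_max` are hypothetical and are not the values the app uses, and taking the absolute value of the determinant is my own simplification.

```python
import numpy as np

def is_plausible_homography(H, det_min=0.1, det_max=10.0):
    """Return True if H looks like a usable frame-to-frame homography.

    det_min and det_max are illustrative thresholds (assumptions),
    not the values used by the app.
    """
    H = np.asarray(H, dtype=float)
    if H.shape != (3, 3) or not np.all(np.isfinite(H)) or H[2, 2] == 0:
        return False
    # A homography is defined up to scale, so normalize before testing.
    H = H / H[2, 2]
    det = abs(np.linalg.det(H))
    # Near-zero determinant: degenerate mapping. Very large: implausible zoom.
    return det_min < det < det_max

# A small translation passes the check; a rank-deficient matrix does not.
shift = np.array([[1.0, 0.0, 5.0], [0.0, 1.0, -3.0], [0.0, 0.0, 1.0]])
degenerate = np.array([[1.0, 2.0, 0.0], [2.0, 4.0, 0.0], [0.0, 0.0, 1.0]])
print(is_plausible_homography(shift), is_plausible_homography(degenerate))
```

When the check fails, the frame would simply be passed through without warping.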

The app also provides an option to zoom in so that the margins are cropped out.

Deflickering

This feature normalizes brightness and contrast to reduce frame-to-frame fluctuations in illumination and contrast. The app has two options for this feature: global and local deflickering.

In the global deflickering option, the app computes the mean and standard deviation of pixel intensity values for each frame and keeps them in circular buffer arrays. The circular buffers work as running average filters on the mean and standard deviation of the intensity values and are used to smooth out global brightness and contrast fluctuations.
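A minimal sketch of the global mode, assuming grayscale 8-bit frames. The class name `GlobalDeflicker`, the `window` size, and the choice to remap each frame linearly to the running-average mean and standard deviation are my assumptions for illustration; the app's actual normalization may differ.

```python
from collections import deque
import numpy as np

class GlobalDeflicker:
    """Running-average normalizer for frame mean and standard deviation."""

    def __init__(self, window=15):
        # deque(maxlen=...) behaves like a circular buffer.
        self.means = deque(maxlen=window)
        self.stds = deque(maxlen=window)

    def apply(self, frame):
        f = frame.astype(np.float64)
        mean, std = f.mean(), f.std()
        self.means.append(mean)
        self.stds.append(std)
        # Smoothed targets: averages over the frames currently buffered.
        target_mean = float(np.mean(self.means))
        target_std = float(np.mean(self.stds))
        # Guard against flat frames, where std is (almost) zero.
        gain = target_std / std if std > 1e-6 else 1.0
        out = (f - mean) * gain + target_mean
        return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

Feeding frames one by one through `apply` pulls each frame's brightness and contrast toward the recent average, so a single over-exposed frame no longer stands out.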

The local mode stores the discrete cosine transform (DCT) coefficients of the consecutive frames in a circular buffer, instead of their mean and standard deviation. It blends those coefficients to smooth out local variations in image brightness, such as cloud shadows. This mode is experimental and not available in the stable branch of the app.
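The sketch below shows one way such a blend could look. It is not the app's algorithm: the blending rule (replace the low-frequency block of the current frame's coefficients with the buffer average and keep the high frequencies untouched), the `cutoff` parameter, and the names `dct_matrix` and `LocalDeflicker` are all assumptions made for illustration. It assumes grayscale 8-bit frames of a fixed size.

```python
from collections import deque
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix D, so that D @ x is the DCT of x."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    D = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    D[0, :] = np.sqrt(1.0 / n)
    return D

class LocalDeflicker:
    """Blend low-frequency DCT coefficients over recent frames (assumed rule)."""

    def __init__(self, shape, window=5, cutoff=8):
        h, w = shape
        self.Dh, self.Dw = dct_matrix(h), dct_matrix(w)
        self.buffer = deque(maxlen=window)  # circular buffer of coefficients
        self.cutoff = cutoff

    def apply(self, frame):
        # 2-D DCT via separable matrix products.
        coeffs = self.Dh @ frame.astype(np.float64) @ self.Dw.T
        self.buffer.append(coeffs)
        c = self.cutoff
        blended = coeffs.copy()
        # Low frequencies carry slowly varying brightness; average them.
        blended[:c, :c] = np.mean([b[:c, :c] for b in self.buffer], axis=0)
        # Inverse DCT (the matrices are orthonormal, so transpose inverts).
        out = self.Dh.T @ blended @ self.Dw
        return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

Because only the low-frequency block is averaged, fine detail in the current frame is preserved while broad, region-sized brightness changes are smoothed.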

Temporal Smoothing

This feature smooths frames temporally to attenuate jitter and spatial noise using temporal median and Gaussian filters.
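A temporal median can be sketched as follows, assuming aligned 8-bit frames of equal size. The function name `temporal_median` and the `radius` parameter are hypothetical; a Gaussian variant would replace the median with a weighted average over the same window.

```python
import numpy as np

def temporal_median(frames, radius=1):
    """Per-pixel median over a sliding window of 2 * radius + 1 frames.

    The window is truncated at the start and end of the sequence.
    """
    stack = np.stack(frames).astype(np.float32)  # shape (T, H, W[, C])
    n = stack.shape[0]
    out = []
    for t in range(n):
        lo, hi = max(0, t - radius), min(n, t + radius + 1)
        out.append(np.median(stack[lo:hi], axis=0))
    return np.rint(np.stack(out)).astype(np.uint8)

# A one-frame outlier (for example a bird crossing a pixel) is removed.
frames = [np.full((4, 4), 100, dtype=np.uint8) for _ in range(5)]
frames[2][1, 1] = 255
smoothed = temporal_median(frames, radius=1)
print(smoothed[2, 1, 1])
```

The median suits transient outliers that appear in a single frame, while a Gaussian-weighted average is better at attenuating noise that is present in every frame.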

I should note that most of the methods I used here are well known, so I claim no novelty for them. The DCT-based deflicker, on the other hand, is a novel approach to the best of my knowledge.